Because the Arclight Symposium brought together media historians with varying degrees of familiarity with the digital humanities, several concepts caught the attention of participants who, like me, are still learning the nuances of digital tools, methods, and concepts. In this article, I survey some digital humanities terms and introduce a few new concepts presented during the symposium.

Disambiguation

During one of the coffee breaks, I joined a discussion of the term “disambiguation,” in which we grappled with its meaning. The concept had come up several times during the morning session. For example, in her presentation “Station IDs: Making Local Markets Meaningful,” Kit Hughes (University of Wisconsin-Madison) reported that radio station call letters provide an excellent source of data for Scaled Entity Search (SES), as they are already disambiguated. In her talk on the final day, “Formats and Film Studies: Using Digital Tools to Understand the Dynamics of Portable Cinema,” Haidee Wasson (Concordia University) quipped that she was “disarticulating” the cinematic apparatus, not “disambiguating” it. So what exactly does it mean to disambiguate data in a digital humanities context?

Sometimes referred to as word sense disambiguation or text disambiguation, disambiguation is “the act of interpreting an author’s intended use of a word that has multiple meanings or spellings” (Rouse). In digital humanities research, disambiguation is usually carried out algorithmically. It is especially important when using digital search tools, as it helps weed out false positive search results that may skew the data. Describing word sense disambiguation in “Preparation and Analysis of Linguistic Corpora,” Nancy Ide explains that the most common approach is statistics-based (297). She elaborates:

In word sense disambiguation, for example, statistics are gathered reflecting the degree to which other words are likely to appear in the context of some previously sense-tagged word in a corpus. These statistics are then used to disambiguate occurrences of that word in an untagged corpora, by computing the overlap between the unseen context and the context in which the word was seen in a known sense. (300)
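To make Ide’s description concrete, here is a minimal Python sketch of the context-overlap idea. It is an illustration, not her implementation or that of any particular system. It assumes a tiny, hypothetical sense-tagged corpus for the ambiguous word “station” (a radio station versus a railway station) and simple whitespace tokenization; the names `TAGGED`, `train`, and `disambiguate` are invented for this example. Real systems rely on far larger corpora and weighted statistics.

```python
from collections import Counter, defaultdict

# Hypothetical sense-tagged training data: (sense label, sentence containing "station").
TAGGED = [
    ("station/radio", "the radio station broadcast a live program to local listeners"),
    ("station/radio", "listeners tuned the dial to the station for the evening broadcast"),
    ("station/rail", "the train pulled into the station and passengers stepped onto the platform"),
    ("station/rail", "passengers waited on the platform at the railway station"),
]

# Very common words carry little sense information, so they are ignored.
STOPWORDS = {"the", "a", "to", "at", "and", "for", "on", "into", "onto", "of"}
TARGET = "station"


def context_words(sentence):
    """Lowercase, split on whitespace, and drop stopwords and the target word."""
    return [w for w in sentence.lower().split() if w not in STOPWORDS and w != TARGET]


def train(tagged):
    """Count how often each context word co-occurs with each known sense."""
    profiles = defaultdict(Counter)
    for sense, sentence in tagged:
        profiles[sense].update(context_words(sentence))
    return profiles


def disambiguate(sentence, profiles):
    """Return the sense whose context profile overlaps most with the unseen context."""
    unseen = Counter(context_words(sentence))

    def overlap(sense):
        # Counter & Counter keeps shared words with the smaller of the two counts.
        return sum((unseen & profiles[sense]).values())

    return max(profiles, key=overlap)


profiles = train(TAGGED)
print(disambiguate("the station aired a broadcast for its listeners", profiles))
print(disambiguate("passengers crowded the platform", profiles))
```

In the first call, the unseen sentence shares “broadcast” and “listeners” with the radio examples and nothing with the railway ones, so the radio sense wins. With real data, overlaps are likely to be sparser and ties more frequent, which is one reason statistical approaches depend on large tagged corpora.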

For more detailed information about disambiguation, refer to A Companion to Digital Humanities, edited by Susan Schreibman, Ray Siemens, and John Unsworth; “Exploring Entity Recognition and Disambiguation for Cultural Heritage Collections” by Seth van Hooland, Max De Wilde, Ruben Verborgh, Thomas Steiner, and Rik Van de Walle; or “Computational Linguistics and Classical Lexicography” by David Bamman and Gregory Crane.

OCR

Several presenters commented on the quality of the OCR in certain documents, remarking that some had very good OCR while others had terrible OCR. Although I gathered that OCR had something to do with converting a physical document into a digital copy, I made a note to learn more about the concept later.

As it turns out, OCR is an acronym for “optical character recognition,” the process of converting a scanned image of a text document into digital text that can be searched and edited. In other words, it is “the technology of scanning an image of a word and recognizing it digitally as that word” (Sullivan). The Google Drive app, for example, allows users to “convert images with text into text documents using automated computer algorithms. Images can be processed individually (.jpg, .png, and .gif files) or in multi-page PDF documents (.pdf)” (Google). When a document is said to have “bad OCR,” this indicates that the algorithm misrecognized many words or segments of text during the OCR process, resulting in misspellings, inaccurate spacing, and words that were not recognized at all.
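One common way to put a number on “good” or “bad” OCR, assuming a hand-corrected transcription is available for comparison, is the character error rate (CER): the edit distance between the OCR output and the correct text, divided by the length of the correct text. The Python sketch below is illustrative only; the sample strings are invented, and the errors in them (“h” read as “li,” “o” read as “0,” “e” read as “c,” a dropped space) are simply typical examples.

```python
def edit_distance(a, b):
    """Levenshtein distance: fewest insertions, deletions, or substitutions turning a into b."""
    prev = list(range(len(b) + 1))  # distances from "" to each prefix of b
    for i, ca in enumerate(a, start=1):
        curr = [i]  # distance from a[:i] to ""
        for j, cb in enumerate(b, start=1):
            curr.append(min(
                prev[j] + 1,               # delete ca
                curr[j - 1] + 1,           # insert cb
                prev[j - 1] + (ca != cb),  # substitute, free when characters match
            ))
        prev = curr
    return prev[-1]


def character_error_rate(ocr_text, ground_truth):
    """Edit distance divided by ground-truth length; 0.0 means a perfect transcription."""
    return edit_distance(ocr_text, ground_truth) / len(ground_truth)


truth = "the station signed on at dawn"
good_ocr = "the station signed on at dawn"
bad_ocr = "tlie stati0n signcd onat dawn"

print(f"good OCR CER: {character_error_rate(good_ocr, truth):.2f}")
print(f"bad OCR CER:  {character_error_rate(bad_ocr, truth):.2f}")
```

Measuring at the character level means a single misread letter counts as a small error rather than a wholly wrong word, which matches how OCR mistakes usually look on the page.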

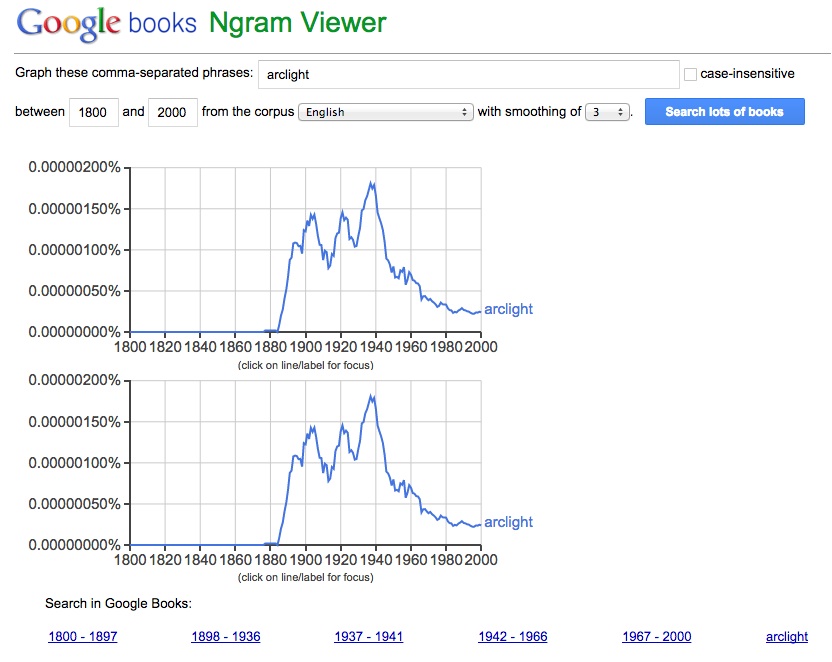

Launched in 2010, Google’s Ngram Viewer is a tool that works alongside Google Books to let users track the popularity of words and phrases over time by charting how frequently they appear in books published in each year. Users enter the word or phrase they want to search, the time frame, and the corpus (e.g. English, Hebrew, Chinese, or French), and the viewer plots the results on a graph.
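The core calculation behind such a chart can be sketched as a word’s count in each year divided by the total number of words from that year, so that years with more books do not automatically look more popular. This is a simplified, hypothetical Python version with an invented four-sentence corpus (`BOOKS`); it is not Google’s pipeline, which works over millions of scanned books and offers options this sketch omits.

```python
from collections import Counter

# Hypothetical miniature corpus of (publication year, text); a real corpus holds millions of books.
BOOKS = [
    (1920, "radio is a new wonder and radio sets sell fast"),
    (1920, "the wireless set hums in the parlour"),
    (1930, "radio drama fills the evening and radio news follows"),
    (1930, "every home has a radio"),
]


def yearly_relative_frequency(books, word):
    """Return {year: share of that year's words that equal `word`}, like a one-word chart."""
    totals = Counter()  # all words per year
    hits = Counter()    # occurrences of `word` per year
    target = word.lower()
    for year, text in books:
        tokens = text.lower().split()
        totals[year] += len(tokens)
        hits[year] += tokens.count(target)
    return {year: hits[year] / totals[year] for year in sorted(totals)}


for year, share in yearly_relative_frequency(BOOKS, "radio").items():
    print(year, f"{share:.1%}")
```

Dividing by each year’s total is the design choice that matters here: raw counts would tend to rise simply because more books were printed in later years.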

In his article “When OCR Goes Bad: Google’s Ngram Viewer & the F-Word,” Danny Sullivan investigates OCR errors in the Ngram Viewer. Because the medial “s” (the long s, ſ) was misrecognized as an “f” in several books in the Ngram Viewer’s corpus, the word “suck” appears as a different, rather inappropriate, word instead. In response to this clear demonstration of bad OCR, Sullivan cautions users that “the Ngram viewer needs to be taken with a huge grain of salt” and that its results require additional, in-depth analysis. The potential for bad OCR points to the limits of over-relying on machine reading and reinforces the importance of shifting from distant to close reading in order to avoid misleading conclusions.
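A small simulation suggests why this kind of error is hard to catch automatically. The Python sketch below assumes a hypothetical passage typeset with the long s (ſ), an OCR step that reads every ſ as “f,” and a toy word list (`KNOWN_WORDS`) standing in for a dictionary; all names are invented for illustration. Misreadings that produce non-words (“faid,” “fhop”) can be flagged, but a misreading that produces another real word, here “ſell” read as “fell,” passes unnoticed. That is the same problem Sullivan describes, and catching it requires reading the passage.

```python
import re

# Hypothetical passage as typeset in an older book, with the long s (ſ) used
# at the start and in the middle of words (a word-final s is printed as a round s).
PRINTED = "He ſaid the ſhop would ſell little elſe, and prices fell."

# Hypothetical word list standing in for a real dictionary.
KNOWN_WORDS = {"he", "said", "the", "shop", "would", "sell", "little",
               "else", "and", "prices", "fell"}


def naive_ocr(text):
    """Simulate an OCR engine that misreads every long s as the letter f."""
    return text.replace("ſ", "f")


def words(text):
    """Lowercase alphabetic tokens."""
    return re.findall(r"[a-z]+", text.lower())


def flag_long_s_errors(tokens, known=KNOWN_WORDS):
    """Flag tokens that are not known words but become known words when f is read as s.

    A misreading that yields another real word (for example "sell" read as
    "fell") is not flagged, so this check cannot catch every error.
    """
    return sorted({t for t in tokens
                   if "f" in t and t not in known and t.replace("f", "s") in known})


ocr_tokens = words(naive_ocr(PRINTED))
print("said:", ocr_tokens.count("said"), "fell:", ocr_tokens.count("fell"))
print("flagged:", flag_long_s_errors(ocr_tokens))
```

In this toy run, “said” disappears from the counts entirely and “fell” is counted twice, even though only one of those occurrences is the word the printer set.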

Listicle

Although it may not be a digital humanities term, “listicle” was a word I had not heard before, despite being bombarded with listicles almost daily by websites like BuzzFeed. A combination of the words “list” and “article,” a listicle is a “short-form of writing that uses a list as its thematic structure” (McKenzie). In his talk “Listicles, Vignettes, and Squibs: The Biographical Challenges of Mass History,” Ryan Cordell (Northeastern University) emphasized that the listicle is not a new phenomenon: it can be traced back to the numbered lists that appeared in nineteenth-century newspapers, such as “15 Great Mistakes People Make in their Lives.” I would not be surprised to see a similar list on BuzzFeed.com, whose listicles include “23 Reasons You Should Definitely Eat the Yolk” and “31 Ways to Throw the Ultimate Harry Potter Birthday Party.”

I often find myself clicking on such links, knowing the listicle will be a short, easy, and entertaining read. There is little doubt that the rising popularity of the listicle and other short forms of online writing is tied to the pressure to maintain high website traffic. The listicle prompts me to question the implications of this short form of writing and the pressure it creates for online journalists and academics alike to produce ever briefer, easily consumable writing, akin to the sound bite, in an environment where websites constantly compete for readers’ attention. With the growing use of Twitter, a micro-blogging platform, among digital humanities scholars, we also need to consider what these instantaneous, bite-size forms of writing might mean for scholarship and the ways in which we reach audiences.

Soundwork

In her presentation “The Lost Critical History of Radio,” Michele Hilmes (University of Wisconsin-Madison) introduced the term soundwork to describe works that draw on the techniques and styles pioneered by radio. For Hilmes, soundwork refers to creative works in sound, whether documentary, drama, or performance, that use elements closely associated with radio across a variety of platforms. She claimed that while cinema’s critical history is now firmly established, the study of radio has been neglected and, as a result, radio lacks its own critical history. The outlook for soundwork is nonetheless encouraging, since cinema once occupied the position that soundwork occupies today and has since gained a firmly established critical history.

Middle-range reading

As mentioned in my last article, Sandra Gabriele (Concordia University) and Paul Moore (Ryerson University) advanced the concept of “middle-range reading” in their presentation “Middle-Range Reading: Will Future Media Historians Have a Choice?” Proposing middle-range reading as a method to investigate the material roots of media, form, and genre, they argued that both close and distant reading lose sight of materiality, embodied practices like reading, and the politics connecting interpretive communities to the historic reader.

The concept of middle-range reading arises in response to the concern that distant (as well as close) reading will lead to the neglect of materiality and embodied practices. In my article “Three Myths of Distant Reading,” I call attention to similar myths surrounding distant reading, countering them with Matthew Jockers’s assertion that “it is the exact interplay between macro and micro scale that promises a new, enhanced, and perhaps even better understanding of the literary record.” I believe Gabriele and Moore are calling attention to the need to address various scales of analysis: rather than privileging one (i.e. distant) over another (i.e. close), we should find a middle ground between the two. In this way, middle-range reading balances the close and the distant and recognizes the slipperiness and fluidity of different scales of analysis.

Analogue humanities

In her talk, Wasson reinforced the importance of “the analogue humanities” in an increasingly digital environment. Arguing for a multi-modal approach to media history research, she recommended that although the digital must be integrated – indeed, has already been integrated – it should be a part of a larger process and project, one driven by conceptual exploration rather than mechanical capabilities.

A common thread through many presentations at the Arclight Symposium was the need for balance in using digital tools and methods. A common misperception among those new to the digital humanities is that digital tools radically alter the research process. Instead, digital methods should be understood as additional tools in our methodological toolkit. What became clear from a number of symposium discussions was the importance of keeping the bigger picture in mind and remembering our media history roots, while letting digital tools and attention to various scales of analysis guide our research. In other words, as Wasson suggested at the close of her presentation, we must “recall the long view to slow research.”

Works Cited

Bamman, David, and Gregory Crane. “Computational Linguistics and Classical Lexicography.” Digital Humanities Quarterly 3.1 (2009): n. pag.

Fillmore-Handlon, Charlotte. “Three Myths of Distant Reading.” Project Arclight. 29 Jan. 2015. Web. 14 July 2015.

Google. “About Optical Character Recognition in Google Drive.” Drive Help. Web. 14 June 2015.

Google. “Google Books Ngram Viewer.” 2013. Web. 14 June 2015.

Ide, Nancy. “Preparation and Analysis of Linguistic Corpora.” A Companion to Digital Humanities. Eds. Susan Schreibman, Ray Siemens, and John Unsworth. Malden: Blackwell Publishing, 2004. 289-305.

Jockers, Matthew. “On Distant Reading and Macroanalysis.” Author’s Blog. 1 July 2011. n. pag.

McKenzie, Nyree. “What Are Listicles and 5 Reasons to Use Them – Thought Bubble.” Thought Bubble. 27 Feb. 2015. Web. 14 June 2015.

Rouse, Margaret. “Disambiguation Definition.” 2005-2015. Web. 15 June 2015.

Sullivan, Danny. “When OCR Goes Bad: Google’s Ngram Viewer & the F-Word.” Search Engine Land. 19 Dec. 2010. Web. 15 June 2015.

van Hooland, Seth, Max De Wilde, Ruben Verborgh, Thomas Steiner, and Rik Van de Walle. “Exploring Entity Recognition and Disambiguation for Cultural Heritage Collections.” Digital Scholarship in the Humanities. 30 November 2013. Web. 14 June 2015.