At the 2014 Film & History Conference, Kit Hughes, Derek Long, Tony Tran, and I had the opportunity to lead an hour-long workshop titled “Historical Illuminations via Digital Tools: The Media History Digital Library, Project Arclight, and a Golden Age for Film History Research.”

Halfway through the workshop, we split off into small groups focused on particular methods of digital research. Derek talked about Lantern and search. Kit and Tony talked about Arclight’s new data mining method of Scaled Entity Search. I led a small group that wanted to learn more about topic modeling.

Due to our limited time, I had to generate the topic models on my own laptop and move quickly through the demo. However, many of the workshop participants expressed interest in experimenting more with topic modeling on their own. This post is written for those participants — and anyone else out there on the web — who wants to try topic modeling magazines from the Media History Digital Library.

A few suggestions up front — both conceptual and technical:

First, the conceptual suggestions: take a look at some of the excellent Digital Humanities scholarship that explains the process of topic modeling, discusses its value, and addresses strengths and weaknesses. I highly recommend two books by Matthew L. Jockers — Macroanalysis: Digital Methods and Literary History (2013) and Text Analysis with R for Students of Literature (2013). I also recommend reading Ben Schmidt’s critique of topic modeling, “Words Alone: Dismantling Topic Models in the Humanities” (2012).

To give us a working definition of topic modeling — and in the interest of everyone’s time, including my own — I’ll recycle the description of topic modeling I provided in my 2014 Film History article, “Lenses for Lantern”:

The algorithm that powers topic modeling—latent Dirichlet allocation (LDA)—is extremely complicated, and lengthy articles have been written to explain it. For the sake of brevity, we can turn to Jockers, who offers the best two-sentence explanation I have been able to find about how the process works: “This algorithm, LDA, derives word clusters using a generative statistical process that begins by assuming that each document in a collection of documents is constructed from a mix of some set of possible topics. The model then assigns high probabilities to words and sets of words that tend to co-occur in multiple contexts across the corpus.” Essentially, you tell your computer to analyze a group of documents, you tell it how many topics you think are present in the documents, and you provide the computer with a “stop list” of words that you want it to ignore. (At the very least, a stop list should include common articles, such as a, and, the, etc.) The computer then returns word clusters that, hopefully, you can interpret as representing topics.

Everyone on board?

If so, let’s move on to the more technical suggestions/instructions. Before you can run your first topic modeling, you’ll need to:

- Download and install R and R Studio to your computer.

- Download the file workspace.zip, unzip it, and move it into a new folder on your computer. This is where you will do your modeling work. Do not try to save the workspace folder and perform your modeling work from your Desktop.

- Develop some comfort and confidence working with R from the command line (not 100% necessary, but it sure helps when you get your first error message and you are confused about how to proceed).

- Select a magazine volume from the Media History Digital Library that you want to topic model. Two sample text files are included in the workspace folder: Modern Screen (1937) and New Movie Magazine (1931). The R script will look for the em>Modern Screen file, though you can substitute a different file. You’ll want to download the TXT file of the magazine, which you can do by clicking “IA Page,” then “All Files: HTTPS”, then saving the file that ends “_djvu.txt”.

Ok, so now how to generate the models…

- Open R Studio and go to “Misc” > “Change Working Directory.” Set your working directory as the unzipped “workspace” folder.

- Open the file “topic_model_r_script.txt” and read it over. Focus especially on the comments — those lines that begin with a #.

- Make a decision about whether you want to use the “modernscreen_1937.txt” file (which corresponds to this magazine volume), or whether you want to select a different text file. Again, I’m biased in favor of the online material that’s part of the Media History Digital Library, but this process should work for most other text files too. You just need to modify this line of code to change what you are analyzing: “text <- scan(“modernscreen_1937.txt”, what = “character”, sep = “\n”) #enter desired file here. we’re using the 1937 volume of the fan magazine.

- Make a decision about whether you want to add or remove any words in the “stop_list.txt” file. Your topic models will ignore any words on that list. Conjunctions, articles, and common first names are generally included in the list. For analyzing magazines, I also found that adding months to the stop list.

- Now copy & paste the code into R Studio and press enter. Give it a whirl. If it works, keep experimenting by setting the K variable (number of topics) at different numbers and by visualizing different topics as word clouds (which you control by changing “i <- 5” to “1 <- 3” or some other number).

What did your topic models tell you about the magazine?

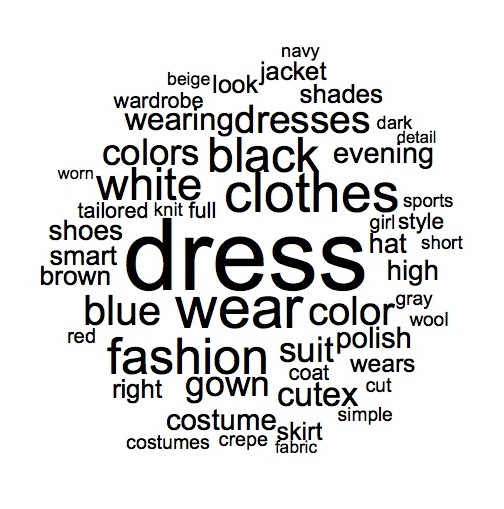

In the case of Modern Screen, we generated some topics that perfectly fit our expectations — like this topic focused on dresses and fashion:

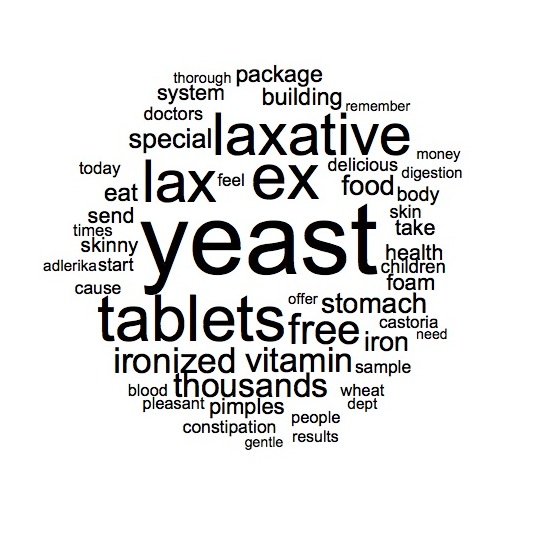

But we also found some topics that we weren’t expecting — like this one that featured the words “yeast” and “laxatives” prominently.

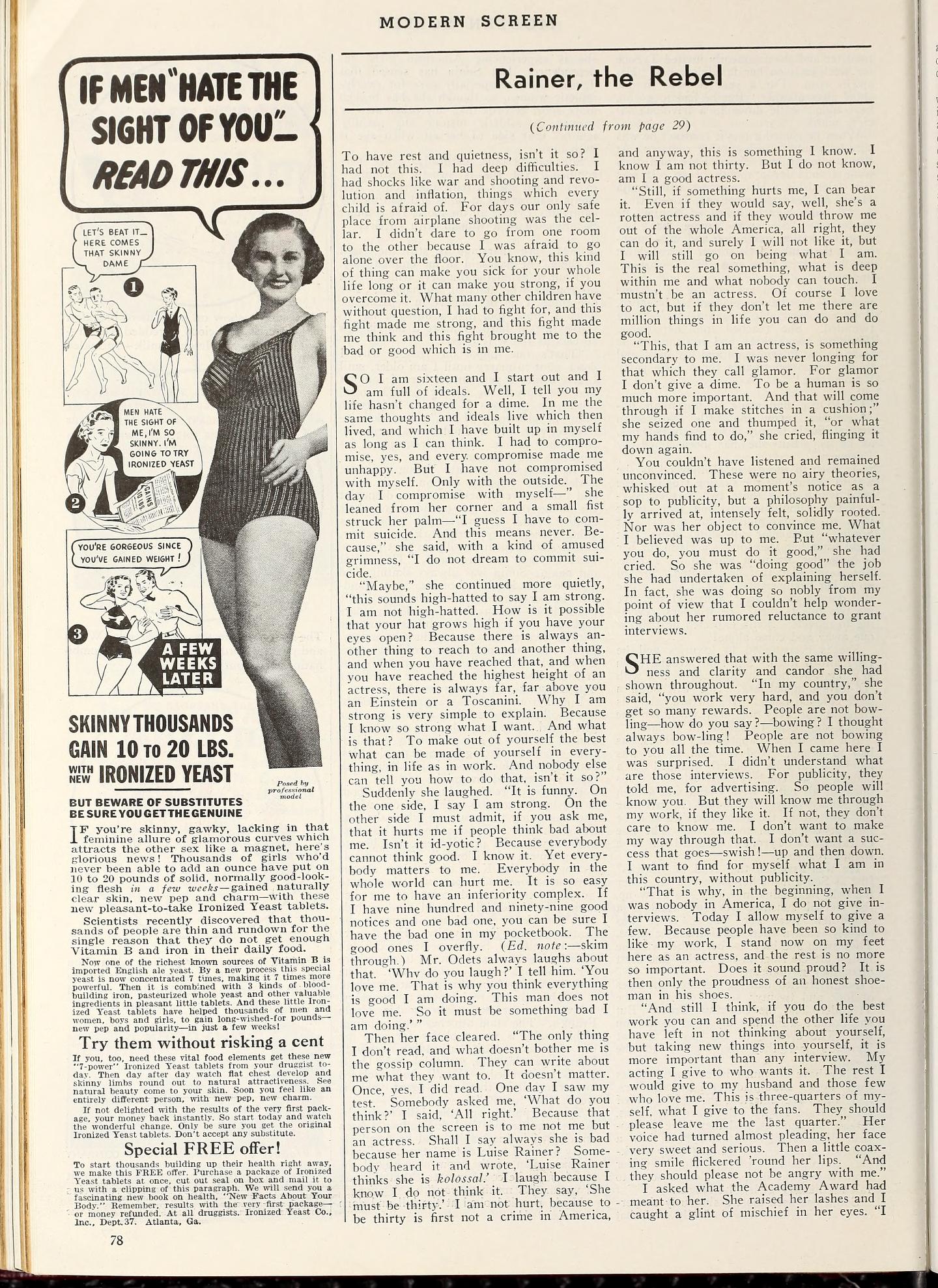

We guessed that this topic had to do with the re-occurrences of particular word clusters in the advertisements of Modern Screen. I checked the magazine, and, sure enough, our assumption proved correct. But the nature of some of these ads defied our 21st century expectations. The yeast that so prominently rose (pardon the pun!) derived from a series of advertisements from the Ironized Yeast Company of Atlanta, Georgia. In ads such as those featured at the bottom this post, the Ironized Yeast Co. promised female readers that they would look more attractive if they took yeast tablets and gained weight. These ads suggest that while the idealized body type has changed over the last 80 years, the practice of advertisers exploiting our insecurities about how our bodies appear has not changed.

This example also shows how topic modeling is not simply about “distant reading.” What I’ve found is that topic modeling is most valuable when paired with close reading and browsing.

When I initially read through Modern Screen, I noticed the articles about celebrities, but not the Ironized Yeast Company’s advertisements. When my computer read Modern Screen, it brought the word “yeast” to my attention. And when I went back to read the occurrences of that word, it left me with a new series of questions about 1930s culture.

Topic modeling is one part of an iterative process that generates new questions, knowledge, and ideas.